Environment:

OS: OEL 7.4

Memory: 6GB

HDD: 120 GB

Nodes: rac01.localdomain, rac02.localdomain

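On both nodes, install the Oracle Database preinstall package and the oracleasm-support package with yum. The preinstall package also creates the oracle OS user and the oinstall and dba groups, and sets the kernel parameters and resource limits Oracle requires.

yum install oracle-database-server-12cR2-preinstall.x86_64 oracleasm-support.x86_64

Then, also on both nodes: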

Set a password for the oracle OS user.

passwd oracle

avahi-daemon should not be running. Stop the service and disable it at startup.

systemctl disable avahi-daemon

systemctl stop avahi-daemon

Disable SELinux by changing SELINUX=enforcing to SELINUX=disabled in the SELinux configuration file, /etc/selinux/config. The change takes effect after a reboot.
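If you prefer not to edit the file by hand, a minimal sketch like the following makes the same substitution with sed. The function name disable_selinux_config is hypothetical, and the file path is a parameter so you can try it on a copy before pointing it at /etc/selinux/config as root.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
# Defaults to /etc/selinux/config; pass another path to test on a copy.
disable_selinux_config() {
  local cfg=${1:-/etc/selinux/config}
  # Rewrite only the SELINUX= line (SELINUXTYPE= does not match),
  # whatever its current value is (enforcing or permissive).
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Usage (as root):
#   disable_selinux_config
```

The edit only applies at the next boot; `setenforce 0` switches the running system to permissive mode in the meantime.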

Stop and disable firewalld

systemctl stop firewalld

systemctl disable firewalld
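The avahi-daemon and firewalld steps above can be wrapped in one loop. This is only a sketch: stop_and_disable is a hypothetical helper, and by default (DRY_RUN=1) it just prints the systemctl commands it would run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: stop and disable each service given as an argument.
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 runs them (as root).
stop_and_disable() {
  local svc action
  for svc in "$@"; do
    for action in stop disable; do
      if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "systemctl $action $svc"
      else
        systemctl "$action" "$svc"
      fi
    done
  done
}

# Usage on each node:
#   DRY_RUN=0 stop_and_disable avahi-daemon firewalld
```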

Append the following entries to /etc/hosts:

cat >> /etc/hosts <<EOF
192.168.100.101 rac01.localdomain rac01
192.168.100.102 rac02.localdomain rac02
192.168.1.101 rac01-priv.localdomain rac01-priv
192.168.1.102 rac02-priv.localdomain rac02-priv
192.168.100.201 rac01-vip.localdomain rac01-vip
192.168.100.202 rac02-vip.localdomain rac02-vip
192.168.100.203 rac-scan.localdomain rac-scan
192.168.100.204 rac-scan.localdomain rac-scan
192.168.100.205 rac-scan.localdomain rac-scan
EOF
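Appending with cat adds the lines again every time the step is repeated. A sketch of an idempotent alternative follows; add_host_entry is a hypothetical helper, and it matches on the IP and FQDN together because the SCAN name appears with three different addresses.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "ip fqdn short" to a hosts file unless an
# entry with the same IP and FQDN already exists.
add_host_entry() {
  local file=$1 ip=$2 fqdn=$3 short=$4
  # awk exits 0 only if a line has this IP in field 1 and this FQDN in field 2
  if awk -v ip="$ip" -v name="$fqdn" \
       '$1 == ip && $2 == name { found = 1 } END { exit !found }' \
       "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s %s\n' "$ip" "$fqdn" "$short" >> "$file"
}

# Usage (as root, once per entry):
#   add_host_entry /etc/hosts 192.168.100.101 rac01.localdomain rac01
#   add_host_entry /etc/hosts 192.168.100.203 rac-scan.localdomain rac-scan
```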

Create the directories for the Grid Infrastructure and database software.

mkdir -p /u01/app/12.2.0/grid

mkdir -p /u01/app/oracle/product/12.2.0/dbhome_1

chown -R oracle:oinstall /u01

Extract the Grid Infrastructure software on the first node only. Oracle_grid_12cR2.zip is the Grid Infrastructure installation archive; in 12c Release 2 it is unzipped directly into the Grid home.

cd /media/sf_Shared_loc/Oracle/

ls

unzip Oracle_grid_12cR2.zip -d /u01/app/12.2.0/grid/

chown -R oracle:oinstall /u01

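Install cvuqdisk-1.0.10-1.rpm on both nodes. The RPM ships in the cv/rpm directory of the extracted Grid home, so copy it to rac02 before installing it there.

cd /u01/app/12.2.0/grid/cv/rpm

rpm -ivh cvuqdisk-1.0.10-1.rpm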
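Because the Grid home exists only on rac01 at this point, the RPM has to be copied to rac02. The sketch below does both installs from rac01; it assumes the RPM is at $GRID_HOME/cv/rpm/cvuqdisk-1.0.10-1.rpm and that root can scp/ssh to rac02. install_cvuqdisk and run are hypothetical helpers, and DRY_RUN=1 (the default) only prints the commands.

```shell
#!/usr/bin/env bash
# Assumed locations; override GRID_HOME or CVU_RPM if yours differ.
GRID_HOME=${GRID_HOME:-/u01/app/12.2.0/grid}
CVU_RPM=${CVU_RPM:-$GRID_HOME/cv/rpm/cvuqdisk-1.0.10-1.rpm}

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Install locally (rac01), then copy and install on each remote node given.
install_cvuqdisk() {
  local remote
  run rpm -ivh "$CVU_RPM"
  for remote in "$@"; do
    run scp "$CVU_RPM" "root@$remote:/tmp/"
    run ssh "root@$remote" rpm -ivh "/tmp/$(basename "$CVU_RPM")"
  done
}

# Usage (as root on rac01):
#   DRY_RUN=0 install_cvuqdisk rac02
```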

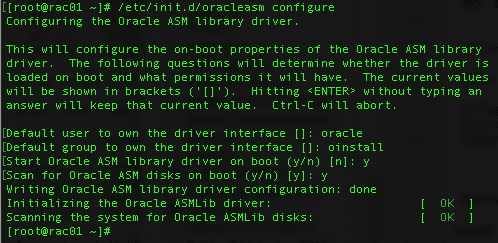

Configure oracleasm on both nodes.

/etc/init.d/oracleasm configure

Create a partition on the newly attached shared disk /dev/sdb. Since the disks are shared, partitioning is done from the first node only.

fdisk /dev/sdb

Dump the partition table of /dev/sdb with sfdisk and apply the same layout to the other disks.

sfdisk -d /dev/sdb | sfdisk /dev/sdc

sfdisk -d /dev/sdb | sfdisk /dev/sdd

sfdisk -d /dev/sdb | sfdisk /dev/sde

sfdisk -d /dev/sdb | sfdisk /dev/sdf

sfdisk -d /dev/sdb | sfdisk /dev/sdg

sfdisk -d /dev/sdb | sfdisk /dev/sdh

sfdisk -d /dev/sdb | sfdisk /dev/sdi

sfdisk -d /dev/sdb | sfdisk /dev/sdj

sfdisk -d /dev/sdb | sfdisk /dev/sdk
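The nine sfdisk lines above can also be written as a loop. This is a sketch with a hypothetical function name; DRY_RUN=1 (the default) only prints the pipelines, because a real run overwrites the partition table of every target disk, so double-check the device names first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the partition layout of $1 to every other argument.
clone_partition_table() {
  local src=$1 dev
  shift
  for dev in "$@"; do
    if [ "${DRY_RUN:-1}" = 1 ]; then
      echo "sfdisk -d $src | sfdisk $dev"
    else
      # Stop at the first disk that sfdisk fails to write
      sfdisk -d "$src" | sfdisk "$dev" || return 1
    fi
  done
}

# Usage (as root, first node only); bash expands /dev/sd{c..k} to /dev/sdc ... /dev/sdk:
#   DRY_RUN=0 clone_partition_table /dev/sdb /dev/sd{c..k}
```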

Verify newly created partitions.

ls /dev/sd*
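Rather than reading the ls output by eye, a small check can report any disk whose first partition is missing. check_first_partitions is a hypothetical helper, and DEV_DIR is a hypothetical override that lets you try it outside /dev.

```shell
#!/usr/bin/env bash
# Hypothetical helper: return non-zero and list every disk without a "<disk>1" node.
check_first_partitions() {
  local dev_dir=${DEV_DIR:-/dev} disk missing=0
  for disk in "$@"; do
    if [ ! -e "$dev_dir/${disk}1" ]; then
      echo "missing: $dev_dir/${disk}1"
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   check_first_partitions sdb sdc sdd sde sdf sdg sdh sdi sdj sdk
```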

Create the ASM disks on the new partitions (first node only).

/etc/init.d/oracleasm createdisk ASMDISK01 /dev/sdb1

/etc/init.d/oracleasm createdisk ASMDISK02 /dev/sdc1

/etc/init.d/oracleasm createdisk ASMDISK03 /dev/sdd1

/etc/init.d/oracleasm createdisk ASMDISK04 /dev/sde1

/etc/init.d/oracleasm createdisk ASMDISK05 /dev/sdf1

/etc/init.d/oracleasm createdisk ASMDISK06 /dev/sdg1

/etc/init.d/oracleasm createdisk ASMDISK07 /dev/sdh1

/etc/init.d/oracleasm createdisk ASMDISK08 /dev/sdi1

/etc/init.d/oracleasm createdisk ASMDISK09 /dev/sdj1

/etc/init.d/oracleasm createdisk ASMDISK10 /dev/sdk1
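The ten createdisk commands follow a fixed pattern, so they can be generated in a loop. This sketch keeps the same order and zero-padded labels as above; create_asm_disks is a hypothetical helper, and DRY_RUN=1 (the default) only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: label <disk>1 as ASMDISK01, ASMDISK02, ... in argument order.
create_asm_disks() {
  local n=1 disk label
  for disk in "$@"; do
    label=$(printf 'ASMDISK%02d' "$n")   # zero-padded two-digit suffix
    if [ "${DRY_RUN:-1}" = 1 ]; then
      echo "/etc/init.d/oracleasm createdisk $label /dev/${disk}1"
    else
      /etc/init.d/oracleasm createdisk "$label" "/dev/${disk}1" || return 1
    fi
    n=$((n + 1))
  done
}

# Usage (as root, first node only):
#   DRY_RUN=0 create_asm_disks sdb sdc sdd sde sdf sdg sdh sdi sdj sdk
```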

Verify the ASM disks on both nodes. On rac02, scandisks picks up the disks that were created on rac01.

/etc/init.d/oracleasm listdisks

/etc/init.d/oracleasm scandisks

/etc/init.d/oracleasm listdisks

Remount /dev/shm with a size of 8 GB.

mount -oremount,size=8G tmpfs /dev/shm

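I encountered the following error during ASM configuration. ORA-00845 is raised when MEMORY_TARGET is larger than the space available in /dev/shm, so increasing the size of the tmpfs fixed the issue.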

[main] [ 2018-02-04 12:19:54.588 NPT ] [UsmcaLogger.logException:186] SEVERE:method oracle.sysman.assistants.usmca.backend.USMInstance:configureLocalASM
[main] [ 2018-02-04 12:19:54.588 NPT ] [UsmcaLogger.logException:187] ORA-00845: MEMORY_TARGET not supported on this system
[main] [ 2018-02-04 12:19:54.588 NPT ] [UsmcaLogger.logException:188] oracle.sysman.assistants.util.sqlEngine.SQLFatalErrorException: ORA-00845: MEMORY_TARGET not supported on this system
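Since ORA-00845 comes down to MEMORY_TARGET not fitting in /dev/shm, it is worth confirming the new size before rerunning the configuration. A minimal sketch follows; mount_size_kb and check_min_size are hypothetical helpers, and the 8 GB figure simply mirrors the remount above.

```shell
#!/usr/bin/env bash
# Total size of the filesystem holding $1, in 1K blocks.
# df -P keeps each filesystem on one line; column 2 is the total size.
mount_size_kb() {
  df -Pk "$1" | awk 'NR == 2 { print $2 }'
}

# Succeed if the filesystem holding $1 is at least $2 KB.
check_min_size() {
  local mnt=$1 required_kb=$2 actual_kb
  actual_kb=$(mount_size_kb "$mnt")
  if [ "$actual_kb" -ge "$required_kb" ]; then
    echo "OK: $mnt is ${actual_kb} KB"
    return 0
  fi
  echo "TOO SMALL: $mnt is ${actual_kb} KB, need ${required_kb} KB"
  return 1
}

# Usage: 8 GB expressed in KB
#   check_min_size /dev/shm $((8 * 1024 * 1024))
```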

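Make the remount persistent across reboots by adding it to /etc/rc.d/rc.local. On OEL 7 this script only runs at boot if it is executable, hence the chmod.

cat >> /etc/rc.d/rc.local <<EOF
mount -o remount,size=8G tmpfs /dev/shm
EOF

chmod 755 /etc/rc.d/rc.local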
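Running the append twice would add the mount line twice. A sketch of an append-once variant follows; append_once is a hypothetical helper that also sets the execute bit rc-local.service needs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append $2 to file $1 only if that exact line is absent,
# then make the file executable so it is run at boot.
append_once() {
  local file=$1 line=$2
  # -F fixed string, -x whole line, -q quiet; a missing file counts as "absent"
  grep -Fqx -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
  chmod 755 "$file"
}

# Usage (as root):
#   append_once /etc/rc.d/rc.local 'mount -o remount,size=8G tmpfs /dev/shm'
```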

Verify the DNS configuration before beginning the installation.

cat /etc/resolv.conf

nslookup rac01.localdomain

nslookup rac-scan.localdomain

Start the Grid Infrastructure installation from the first node, as the oracle user.

cd /u01/app/12.2.0/grid

./gridSetup.sh

Below is the landing page once installation begins.

Select Configure Oracle Grid Infrastructure for a New Cluster and click Next >.

Select Configure an Oracle Standalone Cluster and click Next >.

Enter the Cluster Name and the SCAN Name, which should match the SCAN registered in DNS (rac-scan.localdomain in this setup), then click Next >.

Click Add to add the second node.

Select Add a single node and enter the second node's public hostname (rac02.localdomain) and virtual hostname (rac02-vip.localdomain). Click OK when finished.

Click SSH connectivity, enter the OS username (oracle) and its password, and click Setup to configure SSH between the nodes.

SSH configuration is in progress.

Once SSH is configured, click OK to continue.

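Verify the interface, subnet and usage details: the public subnet (192.168.100.0) should be marked Public and the interconnect subnet (192.168.1.0) Private or ASM & Private. Click Next > to continue.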

Select Configure ASM using block devices. Click on Next > to continue.

Select No for creating a separate ASM disk group for GIMR data, so the Grid Infrastructure Management Repository is stored in the same disk group. Click Next > to continue.

Click Change Discovery Path to set the disk discovery path; in this setup it is /dev/oracleasm/disks/*. Click OK.

Once all the disks are discovered, select the disks to use. Make sure there is enough space to store the GIMR database; at least 50 GB is recommended.

Enter the passwords for the SYS and ASMSNMP accounts and click Next > to continue.

Select Do not use Intelligent Platform Management Interface (IPMI) and click Next > to continue.

There is no EM Cloud Control to register with, so simply click Next > to continue.

Select oinstall for OSASM, OSDBA and OSOPER and click on Next > to continue.

A warning message pops up; click Yes to continue.

Select the Oracle base and click on Next > to continue.

Verify the contents of the Oracle base directory. Since it is safe to continue, click Yes.

Click on Next > to continue.

In my case I want to run the root.sh script manually, so I clear the Automatically run configuration scripts check box and click Next > to continue.

The minimum RAM requirement is 8 GB and these nodes have 6 GB, so the prerequisite checks report a warning. Click Ignore All to ignore all the warnings.

With Ignore All selected, click Next > to continue.

A warning message pops up; click Yes to continue.

The Summary screen is displayed. Click Install to begin the installation.

Installation is in progress.

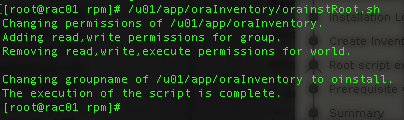

The Execute Configuration Scripts window pops up. Run the scripts as root, one node at a time: run each script on rac01 first and, only after it reports successful completion there, run it on rac02.
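The "rac01 first, then rac02" rule can be sketched as a loop that stops at the first failure. This assumes root can ssh to each node and that the script path is the same on every node; run_serially is a hypothetical helper, and DRY_RUN=1 (the default) only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run script $1 as root on each node in order,
# aborting as soon as one node fails.
run_serially() {
  local script=$1 node
  shift
  for node in "$@"; do
    if [ "${DRY_RUN:-1}" = 1 ]; then
      echo "ssh root@$node $script"
    else
      ssh "root@$node" "$script" || { echo "$script failed on $node" >&2; return 1; }
    fi
  done
}

# Usage, for the root.sh in this guide's Grid home:
#   DRY_RUN=0 run_serially /u01/app/12.2.0/grid/root.sh rac01 rac02
```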

Once the scripts have run on all RAC nodes, click OK to continue.

Installation is in progress.

You can also click Details to open a pop-up window showing the detailed installation status.

Installation is still in progress.

When the installation completes, click Close to exit the installer.
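After closing the installer, a quick health check can confirm the cluster is up. A minimal sketch, assuming it is run as the oracle user on either node and that GRID_HOME is the Grid home used in this guide; verify_cluster is a hypothetical helper wrapping two standard crsctl queries, and DRY_RUN=1 (the default) only prints them.

```shell
#!/usr/bin/env bash
GRID_HOME=${GRID_HOME:-/u01/app/12.2.0/grid}

# Hypothetical helper: check the clusterware stack on all nodes,
# then list the status of every cluster resource.
verify_cluster() {
  local cmd
  for cmd in "check cluster -all" "stat res -t"; do
    if [ "${DRY_RUN:-1}" = 1 ]; then
      echo "$GRID_HOME/bin/crsctl $cmd"
    else
      # $cmd is left unquoted on purpose so it splits into separate arguments
      "$GRID_HOME/bin/crsctl" $cmd || return 1
    fi
  done
}

# Usage:
#   DRY_RUN=0 verify_cluster
```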
